On Ghost Engineers and Proof, or a Lack Thereof

I've been watching this story unfold across the media in real time, and I feel compelled to share my opinion. I'm not a researcher or journalist, but I have worked in engineering for a long time. I don't intend this as an attack on anyone (hopefully it doesn't come across that way), but I think there is fair criticism to be made, and I'll do my best to break it down[1].

If you've been plugged into the dev sphere over the past few weeks, you may have come across a viral thread by a Stanford researcher about "ghost engineers"[2], which claims that 9.5% of engineers at major tech companies do essentially nothing while collecting substantial salaries. It has been cited by news outlets such as Forbes[3], Business Insider[4], Benzinga[5], and 404 Media[6], as well as by tech-focused outlets including ITPro[7] and DevOps.com[8].

The thread makes many claims, illustrated with infographics and charts. However, the author does not provide any raw data or describe the methodology he used to arrive at his conclusions. He does reference a related research paper[9], but that paper does not directly address the claims made in the thread. Instead, it focuses on training models to predict expert evaluations of code in review, which the authors presumably use as a proxy for measuring productivity.

Predicting Code Reviews

The paper attempts to build a model that predicts how a panel of human experts would evaluate commits during code review. The authors sourced commercial Git commit data by inviting organizations to participate in their research, and supplemented that data with commits from public Git repositories:

To accurately reflect real-world software development, we collected commits from private commercial repositories by using LinkedIn to invite businesses to connect their Git repositories. We also included commits from public repositories, using a ratio of one public commit for every five private commits.

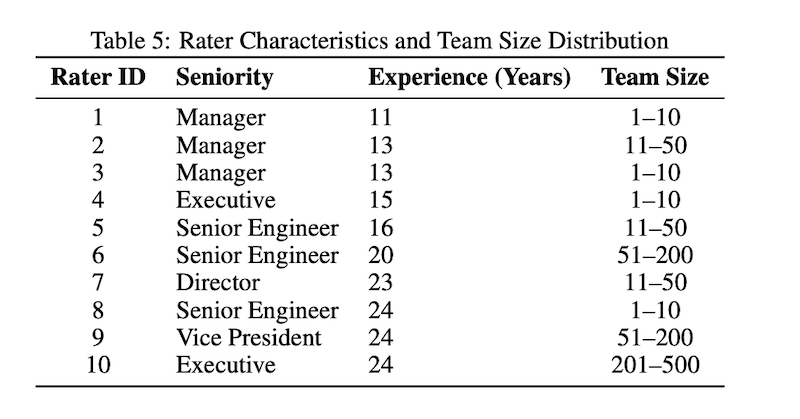

The paper goes on to explain that its model was calibrated against the judgments of exactly 10 experienced Java developers, who were responsible for annotating the code reviews:

To ensure familiarity with software development practices, we chose 10 Java experts as raters with more than 10 years of direct coding experience, including senior developers, tech leads, and architects.

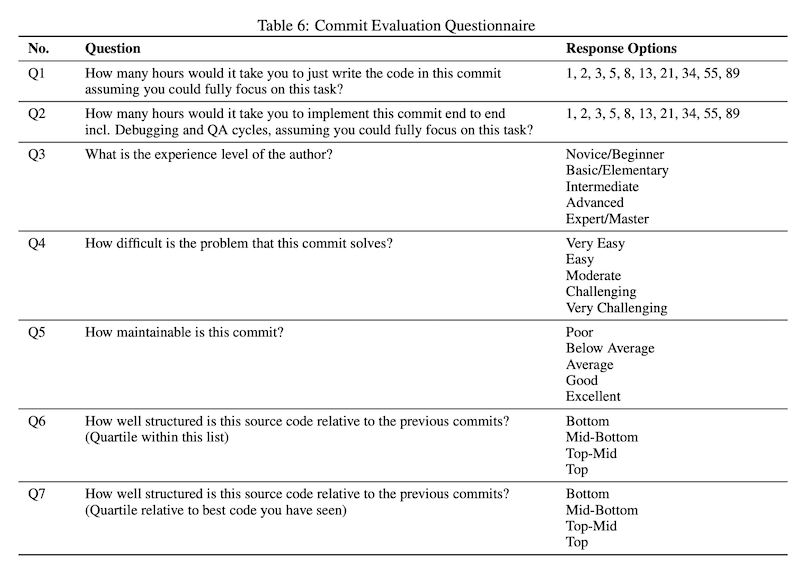

A set of commits was selected for labeling, and the experts were given a link to each changeset along with 7 questions, including:

- How many hours would it take you to just write the code in this commit assuming you could fully focus on this task?

- How many hours would it take you to implement this commit end to end incl. Debugging and QA cycles, assuming you could fully focus on this task?

- What is the experience level of the author?

What this does not take into account is the information asymmetry between the reviewer and the author of the code. A massive amount of work usually goes into gathering the necessary context, consulting with the team on the best way to implement a feature, trial and error, and so on, and very little of it is visible in the final changeset. The paper also doesn't address a possible ego component. Anecdotally, people rarely assess their own skill level accurately. Think of how often you've heard someone say "It's going to be easy," only to say "That was more difficult than I thought" afterward.

Things also generally seem easier with the benefit of hindsight. Intuitively, I'd expect the evaluators to frequently misestimate the questions that quantify work in hours, because software estimation is hard[10] and they are offering their best guess given the information they have. However, the paper does not mention validating the experts' estimates, for example against how long the work actually took. A model trained on this data would therefore learn a backward-looking assessment: how much effort a given task should have taken given perfect information about the solution.

Looking at the composition of the raters leaves me with even more questions:

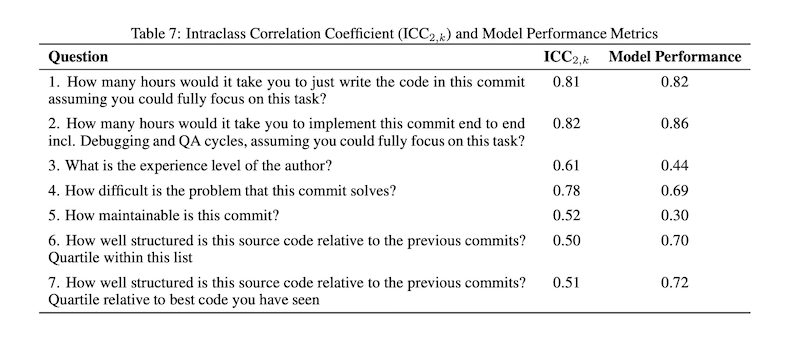

Only 3 of the 10 raters have job titles that presumably require them to write code every day; the remaining 7 are managers, directors, and executives. That context matters when reading some of the paper's results. Consider the intraclass correlation coefficient \(ICC_{2,k}\), which measures how consistently different raters score the same commits (more precisely, how reliable the average of all the raters' scores is). The highest agreement reported is an \(ICC_{2,k}\) of 0.82, for the question "How many hours would it take you to implement this commit end to end incl. Debugging and QA cycles, assuming you could fully focus on this task?"
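For anyone unfamiliar with the statistic: \(ICC_{2,k}\) belongs to the two-way random-effects family described by Shrout and Fleiss. It estimates the reliability of the average of all k raters' scores, while \(ICC_{2,1}\) estimates the reliability of a single rater's score, so a high \(ICC_{2,k}\) does not by itself mean the individual raters agreed with one another. Below is a minimal Python sketch of both, run on a small invented matrix of hour estimates (rows are commits, columns are raters; none of these numbers come from the paper).

```python
from statistics import fmean


def icc2(ratings: list[list[float]]) -> tuple[float, float]:
    """Return (ICC(2,1), ICC(2,k)) for a matrix with one row per commit
    and one column per rater (two-way random effects, Shrout & Fleiss)."""
    n, k = len(ratings), len(ratings[0])
    grand = fmean(x for row in ratings for x in row)
    row_means = [fmean(row) for row in ratings]
    col_means = [fmean(row[j] for row in ratings) for j in range(k)]

    # Sums of squares for commits (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    single = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
    average = (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)
    return single, average


def single_rater_icc(icc_k: float, k: int) -> float:
    """Spearman-Brown step-down: the ICC(2,1) implied by an ICC(2,k) over k raters."""
    return icc_k / (k - (k - 1) * icc_k)


# Hypothetical hour estimates: 5 commits x 4 raters (not data from the paper).
hours = [
    [2, 4, 1, 3],
    [6, 9, 4, 8],
    [1, 2, 1, 1],
    [10, 16, 6, 12],
    [4, 5, 2, 6],
]
single, average = icc2(hours)
print(f"ICC(2,1) = {single:.2f}, ICC(2,k) = {average:.2f}")  # ICC(2,1) = 0.73, ICC(2,k) = 0.91
print(f"{single_rater_icc(0.82, 10):.2f}")  # 0.31
```

The step-down helper uses the Spearman-Brown relationship, which holds exactly between these two coefficients. Assuming (hypothetically, since the paper's rating design may differ) that all 10 raters scored every commit, an \(ICC_{2,k}\) of 0.82 would correspond to a single-rater \(ICC_{2,1}\) of only about 0.31.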

However, no error bars or confidence intervals were provided that would let us understand the variability of the results. The experts may have all scored the same commit the same way, or they may have viewed the problems very differently: the managers and executives may have seen things differently from the engineers, or the engineers themselves may have had wildly contrasting views because of underlying factors such as each rater's experience with the problem domain. With no raw data, error bars, or confidence intervals provided, readers are left to speculate.
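Error bars for an agreement statistic are not hard to produce once the ratings exist. A minimal sketch, assuming the ratings are available as a commits-by-raters matrix: a percentile bootstrap that resamples commits with replacement and recomputes \(ICC_{2,k}\) each time. The ratings are invented, and the paper does not say whether or how it estimated this kind of uncertainty.

```python
import random
from statistics import fmean


def icc2k(ratings):
    """ICC(2,k) for a commits x raters matrix (two-way random effects)."""
    n, k = len(ratings), len(ratings[0])
    grand = fmean(x for row in ratings for x in row)
    ss_rows = k * sum((fmean(row) - grand) ** 2 for row in ratings)
    ss_cols = n * sum(
        (fmean(row[j] for row in ratings) - grand) ** 2 for j in range(k)
    )
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    denom = ms_rows + (ms_cols - ms_error) / n
    if abs(denom) < 1e-12:
        return None  # degenerate resample, e.g. the same commit drawn every time
    return (ms_rows - ms_error) / denom


def bootstrap_ci(ratings, reps=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for ICC(2,k), resampling commits (rows)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        sample = [rng.choice(ratings) for _ in ratings]
        value = icc2k(sample)
        if value is not None:
            estimates.append(value)
    estimates.sort()
    lower = estimates[int(alpha / 2 * len(estimates))]
    upper = estimates[int((1 - alpha / 2) * len(estimates)) - 1]
    return lower, upper


# Hypothetical hour estimates: 8 commits x 4 raters (invented for illustration).
hours = [
    [2, 4, 1, 3],
    [6, 9, 4, 8],
    [1, 2, 1, 1],
    [10, 16, 6, 12],
    [4, 5, 2, 6],
    [8, 6, 12, 5],
    [3, 3, 5, 2],
    [12, 20, 9, 15],
]
point = icc2k(hours)
lower, upper = bootstrap_ci(hours)
print(f"ICC(2,k) = {point:.2f}, 95% CI [{lower:.2f}, {upper:.2f}]")
```

With only a handful of commits the interval can be wide. Publishing something like it (or, better, the raw ratings) would let readers judge how stable the reported agreement actually is.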

Turning to the remaining \(ICC_{2,k}\) values, the authors acknowledge that the raters frequently disagreed with each other, yet they still present the correlations of a model trained on that unstable data as evidence of accuracy:

However, lower rater agreement on author experience, code maintainability, and structure—reflected by lower \(ICC_{2,k}\) values—influences the model’s predictive accuracy. If human judgments are inconsistent, models struggle to predict them. While our model excels in estimating time and complexity, areas with less rater consensus highlight current limitations in automated assessment. Nonetheless, the model’s strong performance in effort metrics and its alignment with expert judgments suggests it can offer fast, reliable, and accurate evaluations of software engineering output, potentially useful for measuring both individual and team performance.[9:1]

In other words, if most of your raters disagree and a model is trained on those results, the model becomes just another unstable voice in the room.

What do Ghost Engineers do?

OK, now for the Twitter thread itself. The author mentions an expanded dataset and describes a methodology similar to the one in the paper discussed above[2:1]:

We have data on the performance of >50k engineers from 100s of companies.

...

Our model quantifies productivity by analyzing source code from private Git repos, simulating a panel of 10 experts evaluating each commit across multiple dimensions.[2:2]

Again, no raw data or evidence is provided. The author simply asserts that his model is accurate and that, based on his dataset, 9.5% of engineers do virtually nothing, and then advocates for companies to lay off 10% of their engineering workforce. All of this ignores the potential for selection bias in how companies were sourced and invited into the program.

~9.5% of software engineers do virtually nothing: Ghost Engineers (0.1x-ers)

...

If each company adds these savings to its bottom line (assuming no extra expenses), the market cap impact of 12 companies laying off unproductive engineers is $465B–with no decrease in performance!

...

Why does this matter?

It’s insane that ~9.5% of software engineers do almost nothing while collecting paychecks.

This unfairly burdens teams, wastes company resources, blocks jobs for others, and limits humanity’s progress.

It has to stop.[2:3]
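The selection-bias concern raised above can be made concrete with a toy calculation. Every number below is invented, and the direction of the bias is an assumption: here, companies that suspect a productivity problem are assumed to be more likely to opt in. If the best-run companies were the ones volunteering, the bias would run the other way.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A hypothetical group of companies (all numbers are made up)."""
    engineers: int          # total engineers in this segment
    ghost_rate: float       # true share of low-output engineers
    volunteer_prob: float   # chance a company in this segment opts in


def population_rate(segments: list[Segment]) -> float:
    """The share we would measure if every company were analyzed."""
    total = sum(s.engineers for s in segments)
    return sum(s.engineers * s.ghost_rate for s in segments) / total


def expected_sample_rate(segments: list[Segment]) -> float:
    """The share we would expect to measure if only volunteers are analyzed."""
    weights = [s.engineers * s.volunteer_prob for s in segments]
    return sum(w * s.ghost_rate for w, s in zip(weights, segments)) / sum(weights)


segments = [
    Segment(engineers=60_000, ghost_rate=0.05, volunteer_prob=0.01),
    Segment(engineers=30_000, ghost_rate=0.10, volunteer_prob=0.03),
    Segment(engineers=10_000, ghost_rate=0.20, volunteer_prob=0.08),
]
print(f"population: {population_rate(segments):.1%}")       # population: 8.0%
print(f"volunteers: {expected_sample_rate(segments):.1%}")   # volunteers: 12.2%
```

Under these made-up numbers, a perfectly accurate model applied only to volunteers would report about 12% when the population rate is 8%. Without knowing how participants were recruited, readers can't tell which way, or how far, the published figure leans.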

If these claims rest on a weak foundation or a flawed methodology, then I'd argue that making them without any proof only sends teams off chasing metaphorical ghosts. I'm happy to be proven wrong, but I haven't seen any evidence for these claims beyond anecdotes.

At this point, if anecdotes are all that's on offer, here are a few that may help explain "Ghost Engineer" behavior.

I had a developer on my team that was very mediocre and all he could do was write reports. But he was happy doing it. When layoffs came, he got let go and then the remaining (higher performing) developers had to take over writing reports. One by one they got bored and quit. The company lost a couple rock star developers because they fired the underperformer.[11]

I've seen this play out firsthand. An engineer (not necessarily a low performer) finds their place on the team by doing the things no one else wants to do. In a highly regulated organization, that might be the mundane work of compiling compliance documentation. Elsewhere, it might be sitting on calls with clients or vendors to work out the details of an API or a piece of hardware, or working with designers and product managers to make sure the requirements are clear and complete. There are countless ways engineers contribute to a team that never end up in a Git repository or PR.

Joe Levy (CEO of Uplevel) also shared a great perspective on LinkedIn[12]:

The conversation has sparked debates on "quiet quitting," but here's the real issue: it’s not about lazy engineers—it’s about broken systems.

At Uplevel, we see this all the time:

⚠️ Engineers blocked by unclear specs, constant meetings, and inefficiencies.

⚠️ Leaders forced to rely on outdated metrics like lines of code to gauge productivity.

⚠️ Teams stuck in a cycle of reactive work, unable to focus on meaningful impact.

The solution isn’t to squeeze more out of already burnt-out teams. It’s to rethink how we measure and manage productivity:

✅ Focus on flow state: Engineers thrive when they can focus on deep work.

✅ Track outcomes, not activity: It’s not about how much work, but the value created.

✅ Use data to unblock teams: Analytics can reveal inefficiencies before they spiral.

It’s time to stop pointing fingers and start fixing the system. Let’s build environments where engineers can thrive and drive real results.

Alberta Tech made a great YouTube video examining these claims and offering her own experience and perspectives[13].

In the end, it's hard to offer a full critique of the claims because no data was provided to examine. We're just comparing anecdotes. I'm not saying that these people don't exist in some capacity, but "trust me bro"[14] is not a good argument to support this claim.

How the media reacted

I read through five articles that directly commented on the claims made in the thread:

- As many as one in 10 coders are 'ghost engineers,' Stanford researcher says, lurking online and doing no work - Business Insider

- 'Ghost Engineers' – Stanford Researcher Reveals That Almost 1 In 10 Software Engineers 'Do Virtually Nothing,' Costing Companies Millions - Benzinga via Yahoo News

- Code Busters: Are Ghost Engineers Haunting DevOps Productivity? - DevOps.com

- Are ‘ghost engineers’ stunting productivity in software development? Researchers claim nearly 10% of engineers do "virtually nothing" and are a drain on enterprises - ITPro

- Are Overemployed ‘Ghost Engineers’ Making Six Figures to Do Nothing? - 404 Media

Many of them highlight limitations of the work, but none of them explicitly demands proof. For example, Business Insider accurately points out some limitations of the research[4:1]:

Denisov-Blanch's research hasn't been peer-reviewed.

There are other caveats. Industry-wide, the 9.5% figure could be an overstatement because Denisov-Blanch's research team ran the algorithm only on companies that volunteered to participate in the study, introducing selection bias.

Conversely, while Denisov-Blanch's team didn't classify employees whose output is only 11% or 12% of the median engineer's as "ghost engineers," there's a strong argument that those employees aren't contributing much either, which could mean the 9.5% figure is an understatement.
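Business Insider's point about the 11% to 12% group is really about where the cutoff sits. Here is a minimal sketch, assuming (as the thread's "0.1x-ers" label suggests) that a ghost engineer is anyone scoring at or below 10% of the median. The scores are made up, and the actual scoring model is not public.

```python
from statistics import median


def ghost_share(scores: list[float], cutoff: float = 0.10) -> float:
    """Share of engineers whose score is at or below `cutoff` x the median score."""
    threshold = cutoff * median(scores)
    return sum(score <= threshold for score in scores) / len(scores)


# Invented productivity scores for 20 engineers; the median is 100.
scores = [5, 8, 10.5, 11.5, 30, 45, 60, 70, 85, 95,
          105, 110, 120, 130, 150, 160, 180, 200, 240, 300]

for cutoff in (0.10, 0.12):
    print(f"cutoff {cutoff:.0%} of median: {ghost_share(scores, cutoff):.0%}")
# cutoff 10% of median: 10%
# cutoff 12% of median: 20%
```

In this invented sample, moving the cutoff from 10% to 12% of the median doubles the headline share. Without the underlying scores, readers can't tell how sensitive the published 9.5% is to that choice.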

404 Media also points out that the work has not been peer-reviewed[6:1]:

The Stanford research has not yet been published in any form outside of a few graphs Denisov-Blanch shared on Twitter. It has not been peer reviewed. But the fact that this sort of analysis is being done at all shows how much tech companies have become focused on the idea of “overemployment,” where people work multiple full-time jobs without the knowledge of their employers and its focus on getting workers to return to the office. Alongside Denisov-Blanch’s project, there has been an incredible amount of investment in worker surveillance tools. (Whether a ~9.5 percent rate of workers not being effective is high is hard to say; it's unclear what percentage of workers overall are ineffective, or what other industry's numbers look like).

Much of the language is appropriately hedged reporting language, and the outlets did acknowledge that the research had not been peer-reviewed. Still, it's unfortunate that none of them (at least as of the time of writing) demanded proof or sought a second opinion. I'm not sure whether that is standard or expected practice in journalism, but speaking as a layperson, if I were handed claims this controversial, I'd at least look for a trusted source in the industry to give me a second opinion. If anyone has good insight on this, please share it in the comments or on another platform.

Conclusion

Maybe I've just been living in my own bubble for too long, but it's disappointing to realize that a single tweet thread is enough for many people, and news outlets in particular, to take its claims at face value. To be clear, I'm not anti-research, nor am I advocating for a lack of productivity. In fact, I hope to see the full results of the author's research when they are ready for publication.

However, as things stand today, I believe that extraordinary claims require extraordinary evidence, and I wish more people would hold others to that standard. But I guess "Stanford Researcher Claims That 1 In 10 Software Engineers Do Nothing Without Providing Evidence" is not a catchy enough headline these days.

[1] To reiterate, I'm not a researcher or journalist. I'm just another dev on the internet. I can and will be wrong every so often. Take my opinions with a grain of salt.

[2] (no date) x.com. Available at: https://x.com/yegordb/status/1859290734257635439 (Accessed: 2024-12-8).

[3] Predin, J.M. (2024) The great tech wake-up call: VCs discover billions wasted on inefficient engineering teams. www.forbes.com. Available at: https://www.forbes.com/sites/josipamajic/2024/11/27/the-great-tech-wake-up-call-vcs-discover-billions-in-inefficient-engineering-teams/ (Accessed: 2024-12-8).

[4] Long, K. (2024) As many as one in 10 coders are 'ghost engineers,' Stanford researcher says, lurking online and doing no work. www.businessinsider.com. Available at: https://www.businessinsider.com/tech-companies-ghost-engineers-stanford-underperformers-coding-2024-11 (Accessed: 2024-12-8).

[5] (no date) finance.yahoo.com. Available at: https://finance.yahoo.com/news/ghost-engineers-stanford-researcher-reveals-141518614.html (Accessed: 2024-12-8).

[6] Koebler, J. (2024) Are overemployed ‘ghost engineers’ making six figures to do nothing? www.404media.co. Available at: https://www.404media.co/are-overemployed-ghost-engineers-making-six-figures-to-do-nothing/ (Accessed: 2024-12-8).

[7] Fitzmaurice, G. (2024) Are ‘ghost engineers’ stunting productivity in software development? Researchers claim nearly 10% of engineers do "virtually nothing" and are a drain on enterprises. www.itpro.com. Available at: https://www.itpro.com/software/development/are-ghost-engineers-stunting-productivity-in-software-development (Accessed: 2024-12-8).

[8] Bridgwater, A. (2024) Code busters: Are ghost engineers haunting DevOps productivity? devops.com. Available at: https://devops.com/code-busters-are-ghost-engineers-haunting-devops-productivity/ (Accessed: 2024-12-8).

[9] Denisov-Blanch, Y. et al. (2024) Predicting expert evaluations in software code reviews. arXiv. Available at: https://arxiv.org/pdf/2409.15152.pdf

[10] Duvall, J. (2022) Software engineering estimates are garbage. www.infoworld.com. Available at: https://www.infoworld.com/article/2335665/software-engineering-estimates-are-garbage.html (Accessed: 2024-12-8).

[11] (no date) Stanford research reveals 9.5% of software engineers 'do virtually nothing' - Slashdot. developers.slashdot.org. Available at: https://developers.slashdot.org/comments.pl?sid=23532517&cid=64973865 (Accessed: 2024-12-8).

[12] (no date) Joe Levy on LinkedIn: A viral tweet just exposed a hard truth: many engineers at top companies…. www.linkedin.com. Available at: https://www.linkedin.com/posts/mrjoelevy_a-viral-tweet-just-exposed-a-hard-truth-activity-7270113050051911681-QFed (Accessed: 2024-12-8).

[13] Alberta Tech (no date) Do 10% of developers do nothing? youtu.be. Available at: https://youtu.be/_a_Vz2ytVCQ?si=Y7H5Udv4DmwcDHfE (Accessed: 2024-12-9).

[14] Gideov1212 (2022) Source Trust Me Bro. www.urbandictionary.com. Available at: https://www.urbandictionary.com/define.php?term=Source%3A+Trust+me+bro (Accessed: 2024-12-9).