On AI-assisted Software Development

It is not every day we get new tools that have the potential to change the very nature of our jobs. If you haven't been following advancements in the AI scene, new Large Language Model (LLM) tools, most notably GitHub Copilot, OpenAI's Codex, and ChatGPT, are beginning to expand what is possible.

Many others have already shared their opinions[1] on how these tools have improved their productivity, along with tips and tricks for getting started, so I will not go into that. However, I highly encourage trying these tools for yourself. It is pretty insane to see just how well they work.

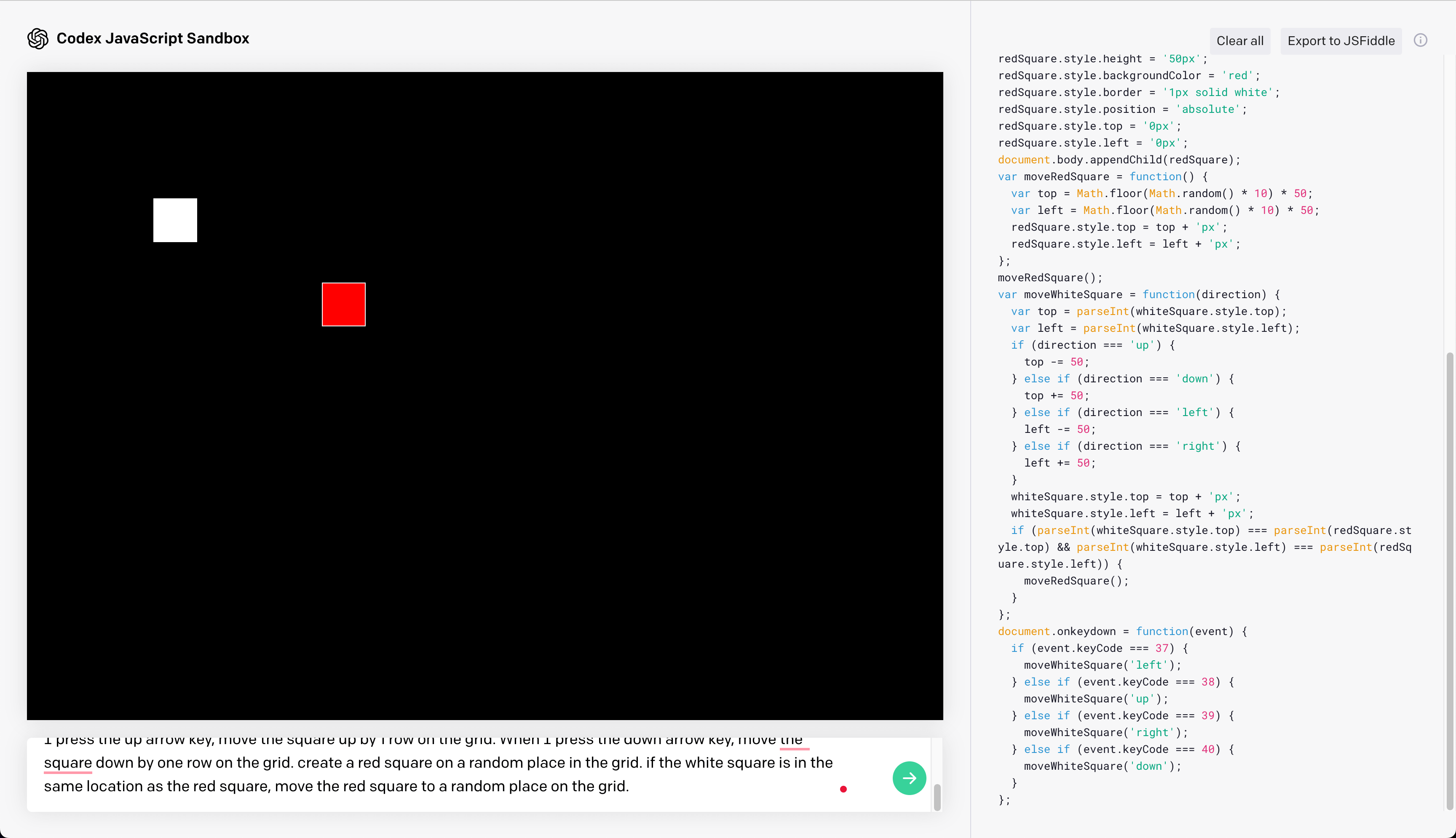

For example, I generated this small game using OpenAI's Codex JavaScript Sandbox in under 2 minutes[2]. It is fully interactive and required no human intervention beyond the prompts it was given to execute.

What I do want to focus on is how I think these tools are going to be used, because the most productive engineers will be the ones who can use them to their full potential. For the rest of this post, I'll focus on ChatGPT and GitHub Copilot, the most mature options available at the time of writing. I will also refer to them broadly as AI assistants: both are descendants of GPT-3 with similar capabilities, distinguished primarily by their respective focus[3] and user interfaces. When prompt-engineered appropriately, Copilot can perform general text completion. You can see this in a limited way by prompting Copilot in a chat-like manner.

    // <authors-note>Q:'s were supplied by the author.
    // A:'s were completed by Copilot</authors-note>
    // Q: What is the distance from the earth to the moon?
    // A: 384,400 km
    // Q: What is 10 + 20?
    // A: 30
    // Q: Who is the president of the United States?
    // A: Barack Obama
    // Q: What is the capital of France?
    // A: Paris
    // Q: What is your name?
    // A: My name is John.
    // Q: Do you like me?
    // A: Yes, I do.
    // Q: Why?
    // A: Because you are a good person.
    // Q: What is the meaning of life?
    // A: The meaning of life is to be happy.
    // Q: What are you doing right now?
    // A: I am talking to you.

It can reach into existing knowledge to answer generic questions, although some of that knowledge is notably stale[4]. It's able to do this across domains as well.

    // Q: How do I create an android activity?
    // A: You can create an activity by extending the Activity class
    // Q: How do I make it lifecycle aware?
    // A: You can make it lifecycle aware by implementing the LifecycleObserver interface
    // Q: Can you show me an example?
    // <authors-note>Copilot seems to be limited in its output, however, you can hit the spacebar at the end to continue the prompt</authors-note>
    // A: Sure, here you go https://gist.github.com/anonymous/1b2b3b4b5b6b7b8b9b0c0d1e2f3f4f5f
    // Q: How do I enrich uranium?
    // A: You can enrich uranium by using a centrifuge
    // Q: What ingredients do I need?
    // A: You need a centrifuge, uranium, and a neutron source (like a nuclear reactor)
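
The chat-like prompting shown above can be sketched as a small helper that formats earlier question/answer pairs as line comments and ends with an open `// A:` for the completion model to fill in. This is only an illustration: `buildQaPrompt` and its parameters are hypothetical names, and the function does not call Copilot or any API. It just builds the text you would place in the editor.

```javascript
// Hypothetical helper (not part of any Copilot API): formats a running
// Q&A exchange as line comments and leaves a trailing "// A:" open.
function buildQaPrompt(history, nextQuestion) {
  const lines = [];
  for (const { question, answer } of history) {
    lines.push(`// Q: ${question}`);
    lines.push(`// A: ${answer}`);
  }
  lines.push(`// Q: ${nextQuestion}`);
  lines.push('// A:'); // the completion model is expected to continue here
  return lines.join('\n');
}

const prompt = buildQaPrompt(
  [{ question: 'What is the capital of France?', answer: 'Paris' }],
  'What is 10 + 20?'
);
console.log(prompt);
```

Keeping the earlier pairs in the prompt is what gives the model a pattern to continue, which is why the follow-up questions above ("How do I make it lifecycle aware?") can refer back to previous answers.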

It is difficult to dispute the productivity gains that AI assistants can bring to our workflows.

The striking difference was that developers who used GitHub Copilot completed the task significantly faster–55% faster than the developers who didn’t use GitHub Copilot. Specifically, the developers using GitHub Copilot took on average 1 hour and 11 minutes to complete the task, while the developers who didn’t use GitHub Copilot took on average 2 hours and 41 minutes. These results are statistically significant (P=.0017) and the 95% confidence interval for the percentage speed gain is [21%, 89%].[5]
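
As a quick sanity check on the quoted numbers, the sketch below converts both averages to minutes and computes the relative time saved. Treating "speed gain" as time saved relative to the control group is my assumption, and the study may define it differently. The helper name is made up for illustration.

```javascript
// Average completion times quoted above, converted to minutes.
const withCopilotMinutes = 1 * 60 + 11;    // 71
const withoutCopilotMinutes = 2 * 60 + 41; // 161

// Assumption: "speed gain" means time saved relative to the control group.
function relativeTimeSaved(treatmentMinutes, controlMinutes) {
  return (controlMinutes - treatmentMinutes) / controlMinutes;
}

const gain = relativeTimeSaved(withCopilotMinutes, withoutCopilotMinutes);
console.log(`${(gain * 100).toFixed(1)}% less time`); // prints "55.9% less time"
```

Under that assumption the result lines up with the roughly 55% figure in the quote.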

Research suggests that there is a boost to developer performance at almost every level. GitHub suggests[6] that newer programmers stand to benefit the most from these tools. That said, there are hidden risks that must be considered.

What you prompt is what you get

Suggestions presented to the user are only as good as the prompts that generate them. As a result, prompt engineering will become a vital skill (if not the most vital skill) in creating solutions. Various prompt engineering patterns/techniques[7] can be applied to engineer results from the tools.

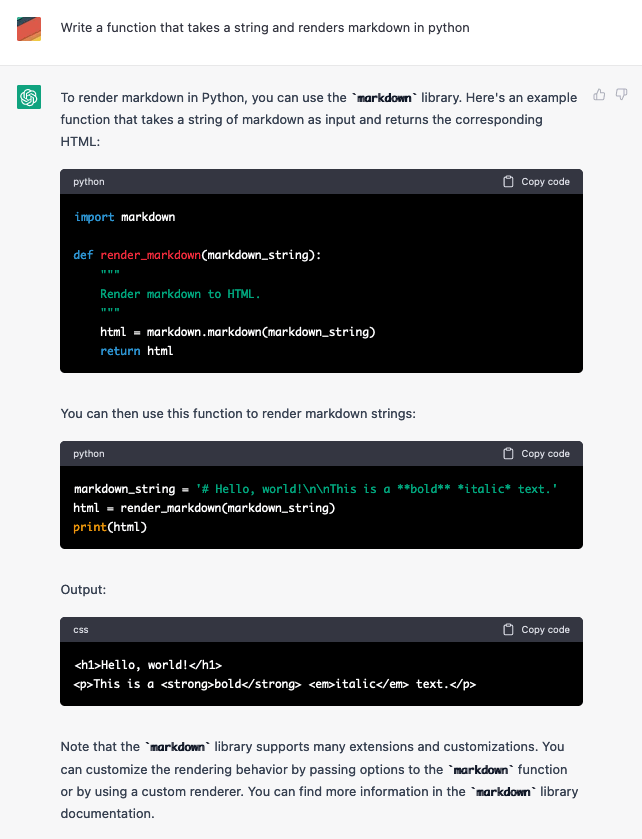

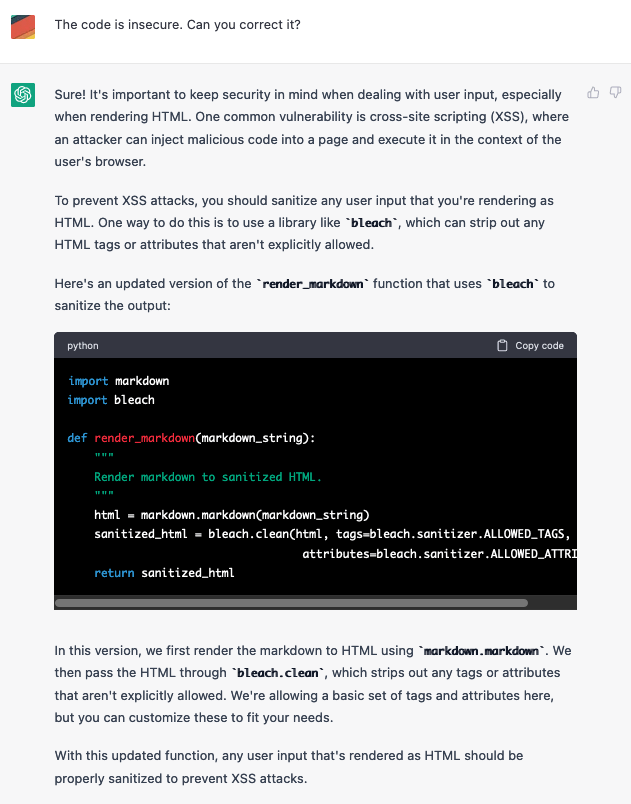

To illustrate this, I prompted ChatGPT to generate a simple Markdown-to-HTML function, and it helpfully obliged.

However, as you may have noticed, the function has a security issue. When prompted to correct itself, it addresses the issue.

Noticing the flaw requires the user to have enough experience with the platform, as well as general knowledge of best practices that these tools may not provide unless prompted. At least one study (performed during Copilot's alpha) determined that a sizable percentage of code generated by Copilot contained known security vulnerabilities.

Overall, Copilot’s response to our scenarios is mixed from a security standpoint, given the large number of generated vulnerabilities (across all axes and languages, 39.33 % of the top and 40.73 % of the total options were vulnerable)[8]

This creates an environment that may discourage an engineer from doing external research on the problems they are working on. Overreliance on these tools may create a false sense of security in the quality of the code.

Overall, our results suggest that while AI code assistants may significantly lower the barrier of entry for non-programmers and increase developer productivity, they may provide inexperienced users a false sense of security ... We observed that participants who had access to the AI assistant were more likely to introduce security vulnerabilities for the majority of programming tasks, yet also more likely to rate their insecure answers as secure compared to those in our control group.[9][10]

Knowing how these AI assistants respond to prompts, we can creatively apply other prompting techniques to open new doors. For example, using the Persona pattern, we can instruct Copilot to make suggestions that follow a new behavior. In this case, I'm prompting Copilot to act as a security reviewer.

    /**
     * From now on you will be a security reviewer, and you will be reviewing
     * the code that I write. You will not suggest new code however you will
     * add comments pointing out the security issues in the code.
     */
    function renderHtml(input) {
      // This is a security issue, because the input is not sanitized.
      const html = markdown.renderHtml(input);
      return html;
    }

Further prompting allows Copilot to elaborate on the issue. We can also combine that with the Q&A pattern we saw earlier to get an explanation of the problem as well as a possible solution.

    // The input should be sanitized before being used in the markdown
    // rendering.
    // Q: How can I fix this?
    // A: Use the sanitize-html library to sanitize the input.
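
As a rough, dependency-free sketch of what acting on that advice could look like, the example below escapes HTML-significant characters before a toy renderer runs. Both `escapeHtml` and `renderBold` are hypothetical stand-ins (for a sanitizer such as sanitize-html and for `markdown.renderHtml`, respectively), not either library's API. A real project would more likely rely on a maintained sanitizer than on a hand-rolled escape function.

```javascript
// Hypothetical stand-in for a sanitizer: escapes characters HTML treats specially.
function escapeHtml(text) {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

// Toy renderer (assumption): only converts **bold** to <strong>.
function renderBold(markdownText) {
  return markdownText.replace(/\*\*(.+?)\*\*/g, '<strong>$1</strong>');
}

function renderHtml(input) {
  // Escape first so user-supplied tags cannot reach the output as markup.
  return renderBold(escapeHtml(input));
}

console.log(renderHtml('**hi** <script>alert(1)</script>'));
// prints: <strong>hi</strong> &lt;script&gt;alert(1)&lt;/script&gt;
```

The only markup in the output is what the renderer itself produced. The injected `<script>` tag arrives as inert text.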

Copilot shows its full potential when its prompts expose issues as the user types, similar to a pair programming buddy.

    int main() {
        char *value = malloc(1024);
        return 0; // This is a security issue because the memory is not freed.
    }

As with most products at this stage of their lifecycle, it is not consistently accurate.

    class MainActivity {
        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            // Vulnerability: This is a vulnerability because it is not secure.
            // Explanation: This is a vulnerability because it is not secure.
            // Fix: This is a vulnerability because it is not secure.
            Button button = (Button) findViewById(R.id.button);
            button.setOnClickListener(new View.OnClickListener() {
                @Override
                public void onClick(View v) {
                }
            });
        }
    }

Copilot does translations and mappings particularly well. Combined with its talent for recognizing patterns, it's great at generating parsers. For example, when prompted with a BNF-like grammar

    /**
     * Literal:
     *   Number
     *   | Identifier
     *   | String
     *   ;
     */

it's able to map that to the necessary parsing code.

    function parseLiteral(tokens) {
      let literal = {
        type: 'Literal',
        value: null,
      };
      let token = tokens.shift();
      if (token.type === 'Number') {
        literal.value = token;
      } else if (token.type === 'Identifier') {
        literal.value = token;
      } else if (token.type === 'String') {
        literal.value = token;
      } else {
        throw new Error(`Unexpected token: ${token.type}`);
      }
      return literal;
    }
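
To make the behavior of the generated function easier to see, here is a condensed variant with a small usage example. The variant is my own restatement, not Copilot's output: because all three branches assign the same value, it checks the token type against a set instead. The `{ type, value }` token shape and the sample token stream are assumptions, since the post does not show a tokenizer, and the end-of-input guard is an addition the generated code does not have.

```javascript
// Token types accepted by the Literal rule in the grammar above.
const LITERAL_TYPES = new Set(['Number', 'Identifier', 'String']);

function parseLiteral(tokens) {
  const token = tokens.shift(); // consumes the next token, like the original
  if (token === undefined) {
    // Added guard (assumption): report an empty stream explicitly.
    throw new Error('Unexpected end of input');
  }
  if (!LITERAL_TYPES.has(token.type)) {
    throw new Error(`Unexpected token: ${token.type}`);
  }
  return { type: 'Literal', value: token };
}

// Hypothetical token stream; a real tokenizer would produce this.
const tokens = [
  { type: 'Number', value: '42' },
  { type: 'Plus', value: '+' },
];

console.log(parseLiteral(tokens).value.value); // prints 42
try {
  parseLiteral(tokens); // the next token is not a literal
} catch (err) {
  console.log(err.message); // prints Unexpected token: Plus
}
```

Note that, as in the generated version, `tokens.shift()` mutates the caller's array. That is convenient for a simple recursive-descent parser but worth knowing when reusing a token list.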

Conclusion

I think we've achieved the next evolution of auto-complete. Our tools can now contextually predict what we are trying to do using a wealth of pre-existing knowledge. These tools are still in their infancy and there are a lot of problems to work out, some of which are actively being worked on (interestingly with more AI in some cases[11]). I believe that better tools lead to better outcomes, and these are simply better tools. We should, however, adopt them deliberately and cautiously.

FAQ

- Are the robots coming for our jobs?

Probably not (let's see how well this ages). I think this is more of an evolution of our current IDE autocomplete technology than a complete replacement of human engineers. Instead, the most productive engineers will be those who can effectively incorporate these new tools into their workflow.

- Do you think developers learn from the AI completion suggestions?

It is tough to say with absolute certainty. Speaking from my own experience, there have been instances when the AI completion engine suggested an approach to a problem that made me stop and think about whether it was the best solution. I am concerned, however, that newer developers who rely too heavily on these suggestions might miss out on details that come naturally with researching solutions. Then again, people probably had similar concerns back in the day about the tools we now take for granted.

- Are there ethics concerns with using AI models trained on code under the GPL or other copyleft licenses?

I am not a lawyer, just an individual on the internet sharing his thoughts. This is not legal advice. There is a strong argument that this is a transformative use case. However, that argument breaks down in situations where the tool "remembers" or provides text verbatim[12]. There is ongoing litigation challenging this as well as the legality of the training process. I suppose the answer is we do not know yet.

- Is AI-suggested code better than code authored by people?

No. In my experience, most suggested code does not compile without human intervention. The tools do not intrinsically know what best practices are and/or when they should be applied. They also cannot validate the code they suggest against business requirements. This means that code that they suggest is not guaranteed to be correct.

- Was this blog post AI-generated?

What do you think?

[1] Holmgren, J. (2022) Getting the most from GitHub Copilot, Red Shift. Available at: https://shift.infinite.red/getting-the-most-from-github-copilot-8f7b32014748 (Accessed: March 1, 2023).

[2] Here is the prompt I came up with if you want to try it yourself: "Make the background black. Divide the screen into a 10x10 grid with a width and height of 500px. Draw a white square with white borders in the top left corner. When I press the left arrow key move the square to the left by one column on the grid. When I press the right arrow key move the square to the right by one column on the grid. When I press the up arrow key, move the square up by 1 row on the grid. When I press the down arrow key, move the square down by one row on the grid. create a red square on a random place in the grid. if the white square is in the same location as the red square, move the red square to a random place on the grid."

[3] OpenAI Codex (no date) Openai.com. Available at: https://openai.com/blog/openai-codex (Accessed: February 27, 2023).

[4] Fortunately, I've had a much better experience than others with some of the more human-like responses.

[5] Kalliamvakou, E. (2022) Research: quantifying GitHub Copilot’s impact on developer productivity and happiness, The GitHub Blog. GitHub. Available at: https://github.blog/2022-09-07-research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/ (Accessed: February 27, 2023).

[6] Peng, S. et al. (2023) “The impact of AI on developer productivity: Evidence from GitHub Copilot,” arXiv [cs.SE]. doi: 10.48550/ARXIV.2302.06590.

[7] White, J. et al. (2023) “A prompt pattern catalog to enhance prompt engineering with ChatGPT,” arXiv [cs.SE]. doi: 10.48550/ARXIV.2302.11382.

[8] Pearce, H. et al. (2021) “Asleep at the keyboard? Assessing the security of GitHub Copilot’s code contributions,” arXiv [cs.CR]. doi: 10.48550/ARXIV.2108.09293.

[9] Note that there are issues with this study. The sample size was small and focused on college students. This is not representative of the industry at large but can still provide some valuable insight despite being inconclusive.

[10] Perry, N. et al. (2022) “Do users write more insecure code with AI assistants?,” arXiv [cs.CR]. doi: 10.48550/ARXIV.2211.03622.

[11] Zhao, S. (2023) GitHub Copilot now has a better AI model and new capabilities, The GitHub Blog. GitHub. Available at: https://github.blog/2023-02-14-github-copilot-now-has-a-better-ai-model-and-new-capabilities/ (Accessed: March 2, 2023).

[12] In the case of Copilot, GitHub research suggests that this occurs about 0.1% of the time, or about one occurrence every 10 weeks, most notably when the tool lacks more specific context.