Notes on the phi-4-reasoning Technical Paper

Phi-4 Reasoning

Microsoft recently released the phi-4 reasoning model as well as its technical report. They explore using supervised finetuning as well as synthetic dataset curation to train phi-4 a 14B parameter model to compete with and often outperform significanly larger models such as DeepSeek-R1-Distill-Llama-70B.

We present Phi-4-reasoning, a 14-billion parameter model supervised fine-tuned on Phi-4 [2], and Phi-4-reasoning- plus obtained by a further round of reinforcement learning. Phi-4-reasoning is trained on high-quality datasets with over 1.4M prompts and high-quality answers containing long reasoning traces generated using o3-mini. The prompts are specifically filtered to cover a range of difficulty levels and to lie at the boundary of the base model capabilities. The datasets used in supervised fine-tuning include topics in STEM (science, technology, engineering, and mathematics), coding, and safety-focused tasks

What immediately stands out to me is the approach of Supervised FineTuning (SFT) in contrast with DeepSeek R1's unsupervised Group Relative Policy Optimization (GRPO) for Phi-4 reasoning. The Phi-4 Reasoning Plus model builds on Phi-4 Reasoning by using GRPO. The magic here is really in the dataset curation and providing challenging examples to the model.

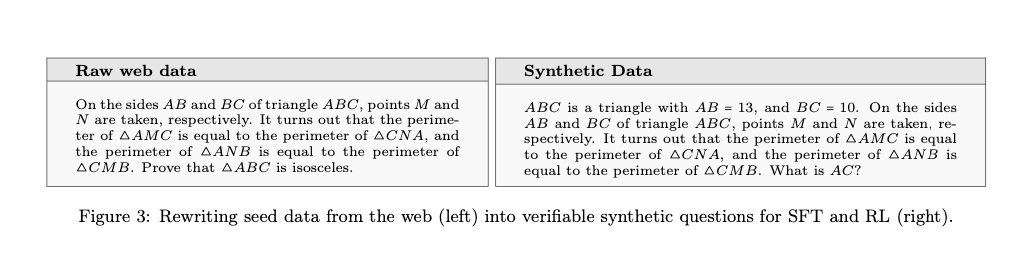

At the core of our data curation methodology is a carefully designed seed selection process. Seeds are a set of prompts or problems which are used in both supervised fine tuning for Phi-4-reasoning and reinforcement learning for Phi-4-reasoning-plus.

Because they rely heavily on synthetic data generation, they describe the initial dataset of prompts as "seeds". These are then suplemented by generating reasoning traces from a larger model. In this case they used o3-mini. They go on to rewrite some of the seed data into verifyable synthetic questions

They didn't provide any example prompts for how these were generated but this example suggests that what they are looking for are direct question -answers as opposed to formal proofs.

To promote consistent chain-of-though behavior, we trained using a reasoning- specific system message that instructed the model to enclose its reasoning traces within designated <think> and </think> tags. In our experiments, using this system message increased robustness and the consistency of chain- of-thought generation. We also experimented with partially removing and/or replacing system messages during training with other generic variants. This increased robustness under random system messages at inference time. However, when evaluated under the original reasoning message, we observed greater variability in benchmarks scores and a slight decrease in average benchmark performance.

This follows the established pattern following DeepSeek R1. They enclose reasoning tokens into a reasoning block delimited by <think> </think> tags. The system prompt in the paper is consistent with the version of the model currently deployed on ollama.

You are Phi, a language model trained by Microsoft to help users. Your role as an assistant involves thoroughly exploring questions through a systematic thinking process before providing the final precise and accurate solutions. This requires engaging in a comprehensive cycle of analysis, summarizing, exploration, reassessment, reflection, backtracing, and iteration to develop well-considered thinking process. Please structure your response into two main sections: Thought and Solution using the specified format:

{Thought section} {Solution section}. In the Thought section, detail your reasoning process in steps. Each step should include detailed considerations such as analysing questions, summarizing relevant findings, brainstorming new ideas, verifying the accuracy of the current steps, refining any errors, and revisiting previous steps. In the Solution section, based on various attempts, explorations, and reflections from the Thought section, systematically present the final solution that you deem correct. The Solution section should be logical, accurate, and concise and detail necessary steps needed to reach the conclusion. Now, try to solve the following question through the above guidelines:

The prompt seems optimized for question answer and encourages the model to always go through a full chain of thought reasoning cycle before responding with a response.

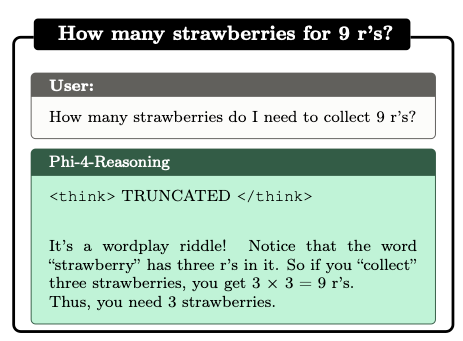

An interesting example provided was the a variation of the Strawberry problem which would appear to be a standard part of benchmarks these days.

I tested this on a few other models for reference:

- o4-mini:

Thought for 5 seconds

Each “strawberry” has 3 r’s (s-t-r-a-w-b-e-r****r-y), so to get 9 r’s you need

$\lceil 9/3\rceil = 3$ strawberries.

- Claude 3.7 Sonnet:

To determine how many strawberries you need to collect 9 letter r's, I need to analyze how many r's are in the word "strawberry."

The word "strawberry" contains 3 letter r's:

- strawberry

This means each strawberry gives you 3 letter r's.

To collect 9 letter r's in total, you would need:

9 ÷ 3 = 3 strawberries

Therefore, you need 3 strawberries to collect 9 letter r's.

- gpt-4o:

To collect 9 r's from the word strawberry, we count how many r's are in one instance of "strawberry":

strawberry contains 2 r's.

To get at least 9 r's:

$$

\left\lceil \frac{9}{2} \right\rceil = 5

$$

You need to collect 5 strawberries to get 9 r's.

- Gemini 2.5 Flash (experimental)

(It generated 4 large paragraphs of thinking I truncated)

It appears your question about collecting strawberries to get "r's" might be specific to a particular game or activity that isn't widely known. In popular games where strawberries are collectibles, such as Celeste or some Strawberry Shortcake games, they typically serve purposes like unlocking content, affecting the ending, or tracking progress, rather than being directly convertible into items or currency referred to as "r's".

Without more context about the game or activity you're referring to, it's impossible to determine how many strawberries you would need to collect to get 9 "r's".

Could you please provide more details about where you encountered this mechanic? Knowing the name of the game or the context of the activity would help in providing a more accurate answer.

Phi-4-reasoning-plus

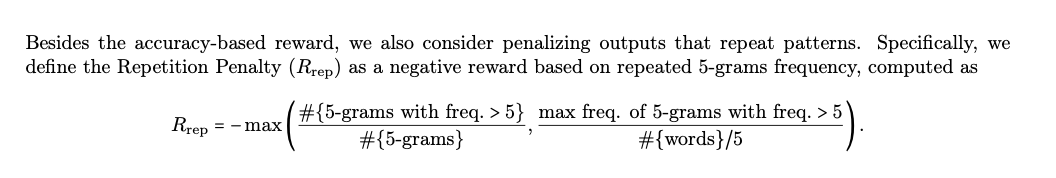

Phi-4-reasoning-plus builds on Phi-4-reasoning by incorporating a GRPO training objective. They trained exclusively on mathematical reasoning. They use a "length-aware" accuracy reward where they encourage the model to generate concise outputs when the answer is correct and use more think tokens when the answer is incorrect. They punish the model when the output is missing an end of sequence token or unclosed think blocks. They also discourage repeating patterns by taking a simple n-gram repitition penalty where n=5.

They detail their hyperparameters as

- batch size of 64 accross 32 H100s,

- Adam with a learning rate of

0.00000005 - 10 step cosine warm up

- GRPO group size of 8

- KL beta of 0.001

- Entropy coefficient of 0.001

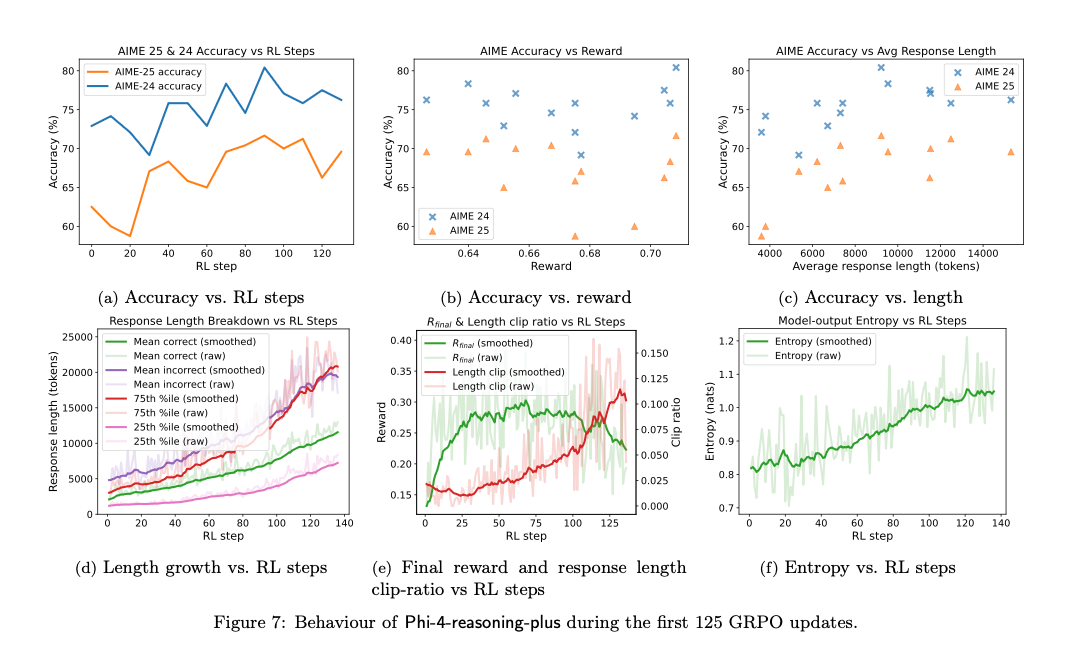

They found that perfromance plateaud after only 90 steps on the AIME benchmark. They saw a 10% increase in performance over phi-4-reasoning.

Starting from a strong SFT model, i.e., Phi-4-reasoning, additional GRPO training for only 90 steps boosts AIME performance by more than 10% (Figure 7a). Further training for more steps does not translate to additional gains, hinting the potential of an already strong SFT model is near its performance ceiling. A caveat to this observation is the fact that we clip responses beyond 31k output tokens during GRPO, which limits the extent to which GRPO can help.

In fact, further improvements to GRPO could potentially be achieved through rejection sampling based solely on response length, particularly for responses significantly exceeding the median length. As illustrated in Fig. 7d, during our training runs, responses within the bottom 25th percentile of length increase similarly to the average length of correct responses across RL iterations. In contrast, incorrect responses tend to grow in length more rapidly with each iteration, aligning closely with the 75th percentile of overall response lengths. This divergence suggests that length-based rejection sampling may enhance model efficiency by selectively moderating overly extensive, typically incorrect outputs.

This is an interesting training approach using SFT to capture the main features of the training then GRPO to min-max performance by providing simple/static reward signals.

Evaluation

To assess reasoning ability more broadly, we adopt a comprehensive suite of benchmarks from [10]. Omni- MATH [16] includes over 4000 olympiad-level problems with rigorous human annotations, covering a wide range of topics and problem types. We also include two new benchmarks, 3SAT and TSP [10] for studying the ability of models to solve NP-hard problems using symbolic and combinatorial reasoning [44, 22]. In addition, we evaluate on BA-Calendar [13], a calendar planning benchmark that requires models to find a common time slot among participants while considering constraints beyond availability, such as time zones, buffer time, priority, etc. Fi- nally, we include two spatial reasoning benchmarks: Maze and SpatialMap [56]. Maze consists of multiple choice questions such as counting the number of turns or determining the spatial relationships between two points in a given maze, and we use the 10×10 version of the benchmark.

They focused a lot of their evaluations on Math focused tasks which is unsurprising considering it was one of their training objectives.

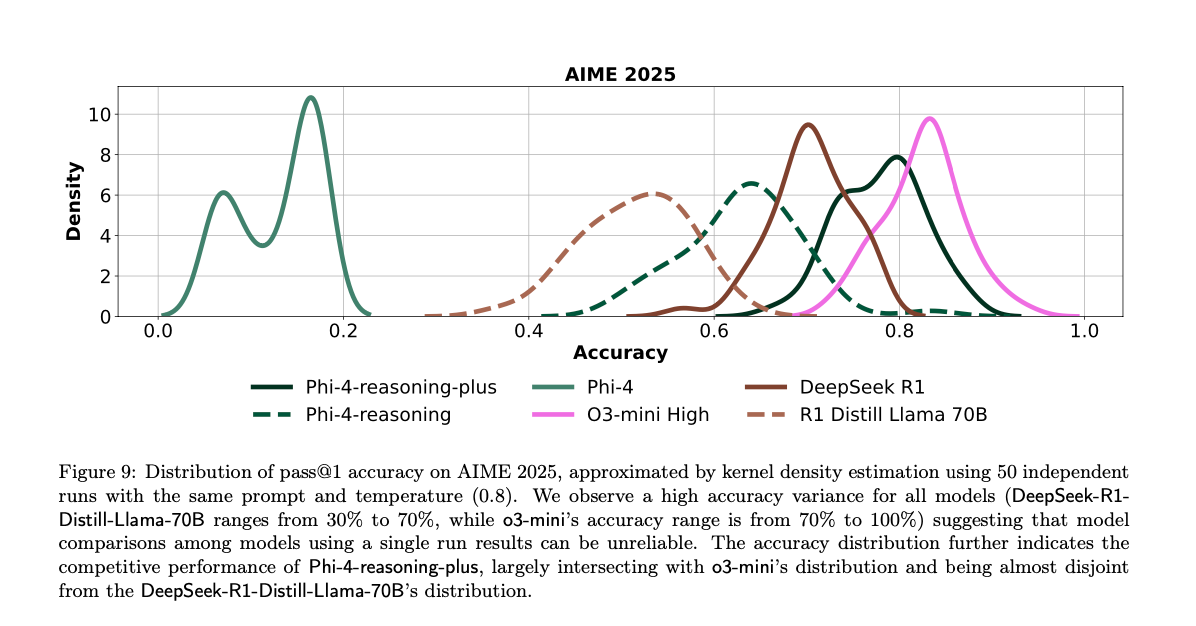

This shows that even for the same prompt and temperature settings, the outputs from the model can vary significantly during independent runs. So you're forced to run the same evaluations repeatedly and aggregate the scores to get an accurate idea of performance

However, LLMs have exhibited large generation nondeterminism, i.e., they may produce substantially different answers given the same prompts and inference parameters (such as temperature and max tokens) [9, 10, 25]. For larger non-reasoning models, nondeterminism can occur even at very low temperature (even zero), and the phenomenon is also almost always expected for reasoning models which are expected to diversify the inference paths and also highly recommended to be run at high temperatures (0.6 ∼ 1.0). Given that AIME 2025 also only contains 30 questions, nondeterminism renders the accuracy-based analysis questionable.

The size of your dataset matters a lot here too due to the non deterministic nature of the outputs and the fact that you are encouraged to run at high temperatures to allow the model to get more creative in reasoning.

Overall they note that based on their benchmarks Phi-4-reasoning outperforms DeepSeek R1 despite its size. Phi-4-reasoning-plus out performs Phi-4-reasoning and is quite competitive with 03-mini-high.