Model Collapse is an Information Degredation Problem

Model collapse has been all over the news lately citing a paper[1] that was recently published in Nature. The paper focuses on the effects of model collapse which is a phenomenon where a model trained on recursively generated data fails progressively and gets worse over time.

"We find that indiscriminate use of model-generated content in training causes irreversible defects in the resulting models, in which tails of the original content distribution disappear. We refer to this effect as ‘model collapse’ and show that it can occur in LLMs as well as in variational autoencoders (VAEs) and Gaussian mixture models (GMMs)"[1:1]

This blew up with many news outlets heralding the death of AI models as we know it[2][3][4]. While there's certainly some truth to the headlines, it misses out on some of the nuances of the problem. Given that most people aren't AI researchers and will not read the paper, I thought it would be helpful to break down the problem in a way that's more accessible. I hope to demonstrate that this is an information transmission problem not necessarily unique to AI.

Information Transmission and Degradation

"early model collapse and late model collapse. In early model collapse, the model begins losing information about the tails of the distribution; in late model collapse, the model converges to a distribution that carries little resemblance to the original one, often with substantially reduced variance."[1:2]

The paper describes two types of model collapse; Early and Late. To understand Early Model Collapse, it's helpful to imagine a game of telephone - where a message is verbally passed sequentially from participant to participant forming a long message chain.

This is an example of serial reproduction[5]. Under most circumstances (assuming a sufficiently complex message), the original message gets more distorted over time; often with hilarious results by the time the message reaches its destination[6]. While we may dismiss the game as a fun pastime, there are some interesting questions one may consider.

- Why does the message get distorted?

- Are there recognizable patterns in the distortion?

- How can we prevent distortion during transmission?

One possible explanation for the distortion is the difference in comprehension between participants. For example, if the original message describes a causality that the recipient doesn't understand, they may (consciously or otherwise) rationalize it in a way that makes sense to them before passing on the message[7]. Alternatively, they may choose to express the message in a way that they think the next person will better understand by simplifying or highlighting certain aspects of the message that they think are important. In many cases, this is due to biases held by intermediate participants. For example, the shock value of the message may be a factor in what parts of the message are preserved, omitted, or exaggerated[7:1].

All in all the chain of interpretation and re-interpretation eventually leads to a heavily degraded shadow of the original message that contains artifacts of the carier's biases.

This is also seen in the media where stories are passed from one outlet to another. To make stories more appealing, some outlets may highlight certain aspects of the story while downplaying others. The Association of Science Communicators highlighted this trend in 2021.

"Breaking scientific discoveries are distorted through a similar game of telephone. On the journey from the lab to your Facebook feed, a scientific message is morphed by a series of exaggerations and misunderstandings."[8]

LLMs and the Telephone Game

Interestingly, Language Models exhibit this behavior as well. Their training implicitly introduces biases and learned assumptions that are expressed as part of their generated output[9]. This is expressed as part of what they choose to highlight as well as the word choices they make. We can simulate this by asking a language model to summarize and re-summarize a text multiple times. Within each successive generation, the model may choose to highlight or omit different parts of the text leading to an overall degradation of the original message.

For this experiment, I selected Wikipedia's article of the day which happened to be about the Aston Martin DB9, and tasked GPT 4o Mini to summarize the text over 10 generations.

def summarize_text(text):

return chat_completion(

f"""

% Role: You are an expert editor for a car magazine.

% Task: Your role is to summarize the given text while retaining as much information as possible. Only respond with the summary and no other text

% Input: ```{text}```

"""

)

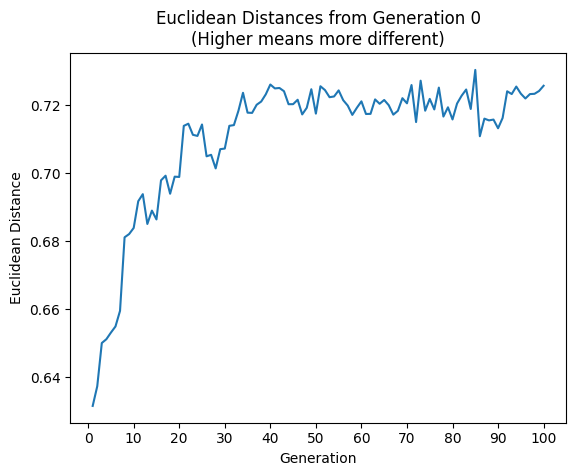

To determine how much the text changes between iterations, I calculate the Euclidean distance between the embeddings of the original text and the embeddings of the generated text at each step using text-embedding-3-small.

def embed_document(text, model="text-embedding-3-small"):

text = text.replace("\n", " ")

return client.embeddings.create(input=[text], model=model).data[0].embedding

def euclidean_distance(a, b):

return sum((a - b) ** 2 for a, b in zip(a, b)) ** 0.5

Over 100 generations we can see that the text gradually deviates from the original corpus.

Looking in detail we can see the subtle shift in language that changes the meaning of the text between Generation 1 and 2

Generation 1

The Aston Martin DB9, a grand touring car produced between 2004 and 2016, was designed by Ian Callum and Henrik Fisker. Available as both a coupe and a convertible (Volante), it succeeded the DB7 and debuted at the 2003 Frankfurt Motor Show. Built on a lightweight aluminum structure, the DB9 underwent several facelifts, with key updates in 2008, 2010, and a significant redesign in 2012 that enhanced its aesthetics and power output. The car features a 5.9-litre V12 engine generating up to 547 PS, with a 0 to 60 mph time as low as 4.4 seconds.

Aston Martin Racing adapted the DB9 for competition, resulting in the DBR9 and DBRS9 models. The DB9 has seen special editions like the DB9 LM and DB9 GT, and during its production, approximately 16,500 units were made before it was succeeded by the DB11 in 2015. The DB9 received accolades for its luxurious design, although it was also critiqued for handling compared to competitors like the Porsche 911.

---------------------------------------------------

Generation 2

The Aston Martin DB9, a grand touring car produced from 2004 to 2016, was designed by Ian Callum and Henrik Fisker and offered as a coupe and convertible (Volante). It succeeded the DB7, debuted at the 2003 Frankfurt Motor Show, and featured a lightweight aluminum structure. The DB9 underwent several updates, including major redesigns in 2008, 2010, and 2012, enhancing its aesthetics and increasing its 5.9-litre V12 engine output to 547 PS, achieving 0 to 60 mph in 4.4 seconds. Adapted for racing, it spawned the DBR9 and DBRS9 models, along with special editions like the DB9 LM and DB9 GT, totaling around 16,500 units produced before being succeeded by the DB11 in 2015. The DB9 was praised for its luxurious design but faced criticism for its handling compared to rivals like the Porsche 911.

Overall they express the same idea however the devils are in the details here.

"with key updates in 2008, 2010, and a significant redesign in 2012 that enhanced its aesthetics and power output."

Suggests that design changes in 2008 and 2010 were important however 2012 had a major redesign. In Generation 2 the text was rephrased to

"including major redesigns in 2008, 2010, and 2012,"

Which I interpret as suggesting that all redesigns were major which was not the case in the original text. (Notably 2008 and 2010 were dropped by the 4th Generation.)

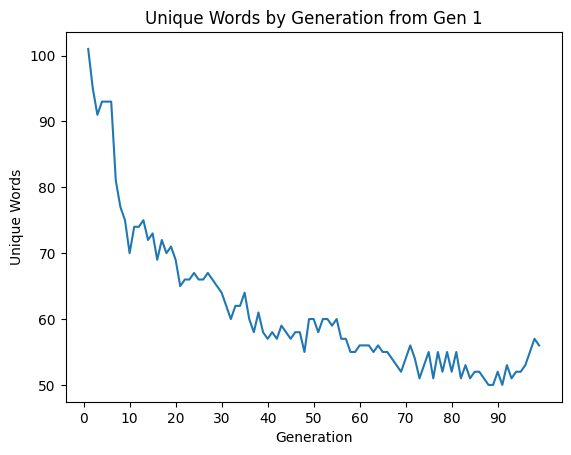

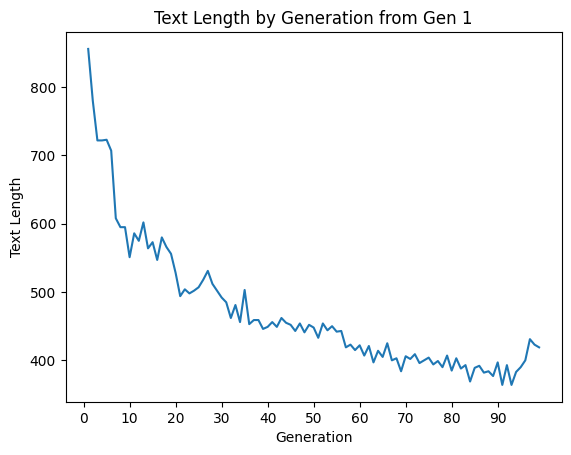

This trend continues over the 100 generations which we can see by tabulating the overall text lengths and unique words used in each generation.

Further examining the output in later generations reveals what the model biases may have learned to highlight.

Generation 95

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum successor to the DB7, produced from 2004 to 2016 as a coupé and convertible. A 2012 facelift introduced the DB9 LM and DB9 Zagato Spyder Centennial. Despite facing handling critiques, it achieved motorsport success and was succeeded by the DB11 in 2016, with around 16,500 units manufactured.

---------------------------------------------------

Generation 96

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum successor to the DB7, produced from 2004 to 2016 in coupé and convertible forms. A 2012 facelift introduced the DB9 LM and DB9 Zagato Spyder Centennial. While it faced handling critiques, it achieved motorsport success and was succeeded by the DB11 in 2016, with approximately 16,500 units produced.

---------------------------------------------------

Generation 97

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum successor to the DB7, produced from 2004 to 2016 as a coupé and convertible. It underwent a 2012 facelift that included the DB9 LM and DB9 Zagato Spyder Centennial. Despite facing handling critiques, the DB9 achieved motorsport success and was succeeded by the DB11 in 2016, with around 16,500 units produced.

---------------------------------------------------

Generation 98

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum successor to the DB7, produced from 2004 to 2016 in both coupé and convertible forms. It received a facelift in 2012, leading to variants like the DB9 LM and DB9 Zagato Spyder Centennial. Despite some handling critiques, the DB9 enjoyed motorsport success and was succeeded by the DB11 in 2016, with approximately 16,500 units manufactured.

---------------------------------------------------

Generation 99

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum successor to the DB7, produced from 2004 to 2016 in coupé and convertible forms. It underwent a facelift in 2012 and had variants such as the DB9 LM and DB9 Zagato Spyder Centennial. While it faced some handling critiques, the DB9 achieved motorsport success and was succeeded by the DB11 in 2016, with around 16,500 units produced.

---------------------------------------------------

Generation 100

The Aston Martin DB9, designed by Ian Callum and Henrik Fisker, was a lightweight aluminum replacement for the DB7, manufactured from 2004 to 2016 in coupé and convertible styles. It received a facelift in 2012 and had variants like the DB9 LM and DB9 Zagato Spyder Centennial. Despite some handling critiques, it found motorsport success and was succeeded by the DB11 in 2016, with approximately 16,500 units produced.

---------------------------------------------------

Notice that at this stage a lot of information has been lost, the message still contains factual elements but contains some distorted reinterpretations and there's very much less variance between outputs. This is an example of what the paper describes as early model collapse.

"... More often than not, we get a cascading effect, in which individual inaccuracies combine to cause the overall error to grow. For example, overfitting the density model causes the model to extrapolate incorrectly and assigns high-density regions to low-density regions not covered in the training set support; these will then be sampled with arbitrary frequency."[1:3]

What this means is that If we were to train on the example above the model would converge on the distorted message as its ground truth leading to a model that is unable to generate accurate outputs. In the late collapse scenario, the output would likely converge on noise.

How do we mitigate this?

Now that we understand how and why information gets distorted, the natural question is how to prevent and fix the issue. The most obvious and likely answer to me is better data curation, by evaluating the quality of training data that's used to train a model, we can ensure that the right amount of information is present in the data which accurately reflects the various perspectives that the model may need to generate accurate outputs.

This is actually how the Microsoft Phi family of models are trained partly on synthetic data.

"We introduce phi-1, a new large language model for code, with significantly smaller size than competing models: phi-1 is a Transformer-based model with 1.3B parameters, trained for 4 days on 8 A100s, using a selection of ``textbook quality" data from the web (6B tokens) and synthetically generated textbooks and exercises with GPT-3.5 (1B tokens). Despite this small scale, phi-1 attains pass@1 accuracy 50.6% on HumanEval and 55.5% on MBPP. It also displays surprising emergent properties compared to phi-1-base, our model before our finetuning stage on a dataset of coding exercises, and phi-1-small, a smaller model with 350M parameters trained with the same pipeline as phi-1 that still achieves 45% on HumanEval."

This is a non-trivial task that requires a lot of manual effort but can be automated to some extent. For example, using an existing model to classify data for information density and educational value[10].

Another solution that the original paper proposes; could be to create a chain of data provenance where LLM-generated data is separated from human-generated data. This would allow for the model to be trained on a mix of data that is more likely to be accurate and prevent the model from feeding on LLM-generated data.

"The need to distinguish data generated by LLMs from other data raises questions about the provenance of content that is crawled from the Internet: it is unclear how content generated by LLMs can be tracked at scale. One option is community-wide coordination to ensure that different parties involved in LLM creation and deployment share the information needed to resolve questions of provenance."[1:4]

This may be possible with tools such as Google's SynthID which Google claims can be used to detect generated content across modalities

"Identification: SynthID can scan images, audio, text or video for digital watermarks, helping users determine if content, or part of it, was generated by Google’s AI tools."

Conclusion

While Model Collapse is a real problem, I think it's an information transmission issue as opposed to an issue unique to AI. It's an avoidable one with the right tools and techniques. I don't think this spells the end of AI as many outlets would like us to believe but I do think it creates a new challenge for people looking to train models for the first time. Given that degredation of information is an artifact of information transfer and exchange, how do we ensure quality and information provenance in a world where most data are nth-generation accounts? Particularly in a world where the rate of data creation will greatly outpace our ablility to curate it as a result of AI-generated content.

Shumailov, I. (2024) AI models collapse when trained on recursively generated data. Available at: https://www.nature.com/articles/s41586-024-07566-y (Accessed: 2024-7-27). ↩︎ ↩︎ ↩︎ ↩︎ ↩︎

Corden, J. (2024) Study: AI "inbreeding" may cause model collapse for tools like ChatGPT, Microsoft Copilot. www.windowscentral.com. Available at: https://www.windowscentral.com/software-apps/study-ai-incest-may-cause-model-collapse-for-tools-like-chatgpt-microsoft-copilot (Accessed: 2024-7-27). ↩︎

Mulligan, SJ. (2024) AI trained on AI garbage spits out AI garbage. Available at: https://www.technologyreview.com/2024/07/24/1095263/ai-that-feeds-on-a-diet-of-ai-garbage-ends-up-spitting-out-nonsense/ (Accessed: 2024-7-27). ↩︎

Griffin, A. (2024) AI systems could collapse into nonsense, scientists warn. Available at: https://www.independent.co.uk/tech/ai-chatgpt-artificial-intelligence-nonsense-b2586279.html (Accessed: 2024-7-27). ↩︎

Meylan, S. (2014) The telephone game: Exploring inductive biases in naturalistic language use. Available at: https://escholarship.org/uc/item/0862v8jz (Accessed: 2024-7-27). ↩︎

If you enjoy games like this I recommend giving https://garticphone.com/ a try with your team. It's a lot of fun and illustrates the problem in an interactive way. ↩︎

Breithaupt, F. (2018) Fact vs. Affect in the telephone game: All levels of surprise are retold with high accuracy, even independently of facts. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6255933/ (Accessed: 2024-7-27). ↩︎ ↩︎

ASC (2021) Science in the media: A game of telephone. www.associationofsciencecommunicators.org. Available at: https://www.associationofsciencecommunicators.org/2021/science-in-the-media-a-game-of-telephone/ (Accessed: 2024-7-27). ↩︎

Perez, J. (2024) When LLMs play the telephone game: Cumulative changes and attractors in iterated cultural transmissions. Available at: http://arxiv.org/abs/2407.04503 (Accessed: 2024-7-27). ↩︎

Gunasekar, S. (2023) Textbooks are all you need. Available at: http://arxiv.org/abs/2306.11644 (Accessed: 2024-7-27). ↩︎